Testing the Limits of AI Video with Google Veo 3.1: Incremental Update or Major Shift?

Google released the newest version of Flow, their AI video generation tool. Here's everything you need to know.

Google Veo 3.1 is finally here!

It’s not the 4.0 model release I was hoping for, but it is a substantial update to their powerful and popular tool, Google Flow.

I’ve personally been looking forward to the next Google Flow update for months now. It has felt long overdue to me, especially with the Sora 2 release from OpenAI.

The Sora 2 update (huge thanks to for providing access!) has taken the world by storm, so Google needed to follow suit to stay up to date and in the game.

Behold: Veo 3.1.

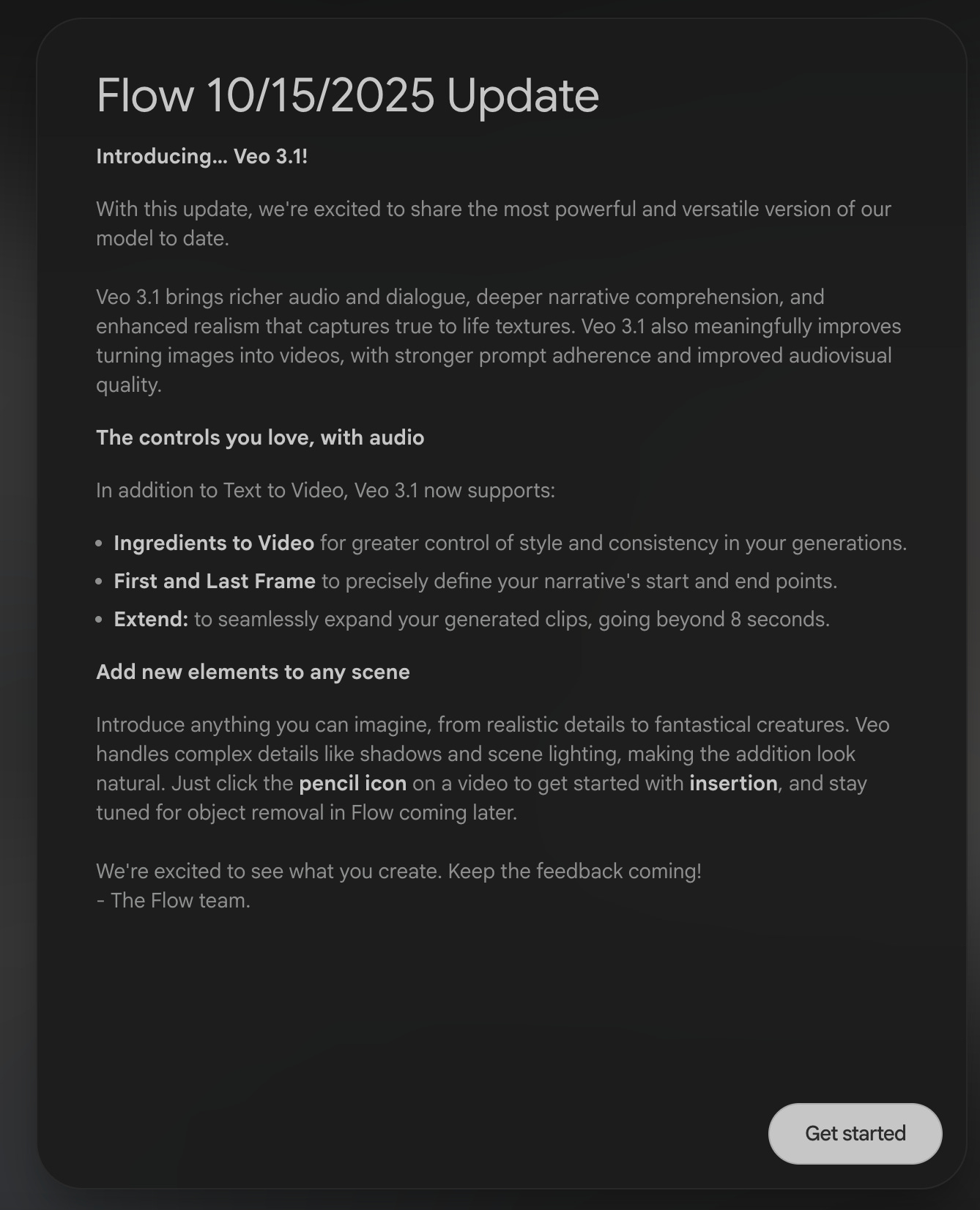

Here is the update screen from Google:

Google Veo 3.1 Update Breakdown

Let’s look at each update here and break it down, one by one.

I’ll also add some context with my own experience from Veo 2 and Veo 3, and how they compare.

Overall Improvements

Veo 3.1 brings richer audio and dialogue, deeper narrative comprehension, and enhanced realism that captures true-to-life textures. Veo 3.1 also meaningfully improves turning images into videos, with stronger prompt adherence and improved audiovisual quality.

I noticed right away that the audio sounds more realistic, and the characters’ voices feel more human and less processed.

If you’ve heard a lot of AI voices, then you know what I mean by “processed” - there’s been a sort of inhuman clip to the voices so far that Veo 3.1 seems to have improved.

Google says that this update has stronger prompt adherence. In my 3.1 experience so far, this very much depends on what you’re asking it to do.

If I’m not specific with the prompt, it will have a wider range of outputs in 3.1 than in previous models, but the quality of the outputs seems to have taken a step back in some contexts.

One example: there are more camera movements and shot cuts if you aren’t explicit about how many you want (or don’t want).

If I describe a mascot scene, for instance, but I don’t tell it to keep the camera static throughout, 3.1 gives the generated scenes more cuts, typically zooming in on one of the characters’ faces while they speak.

Depending on the nature of the scene, you may or may not want those kinds of cuts.

More Control Tools?

Google says 3.1 has more tools for greater control. Let’s break these claims down too:

Ingredients to Video for greater control of style and consistency in your generations.

First and Last Frame to precisely define your narrative’s start and end points.

Extend: to seamlessly expand your generated clips, going beyond 8 seconds.

Ingredients to Video

If you follow me on Instagram or TikTok, you know that mascots are my thing.

On a recent video prompt, I wanted to create the mascot for my Wikipedia video.

99% of the time, I will just describe the mascot in detail to get what I want.

With this new update, however, I tried to use the Wikipedia logo as an “ingredient” to get closer to the real thing.

And lo and behold… it worked! Mostly.

Yes, it was a better output than not including the logo image as a reference “ingredient”.

But it wasn’t exactly mind-blowing.

Ultimately, neither one looked quite like I had envisioned it, so I went with a different mascot style entirely for Wikipedia.

First and Last Frame & Extend

One thing that’s been a major improvement is the first frame setting.

This allows you to take a screenshot from the end of your current video segment (which is automatically saved in Google Flow!) and make that image the beginning of your next 8-second segment.

With only 8 seconds produced at a time, this is very important, because it allows you to get the consistency you want across the entire video. I used this on the Wikipedia video as well.

I wanted the Wiki mascot to have a much longer monologue than I usually write before the girl spoke, and then I wanted him to respond to her question afterward.

The whole script would take about 30 seconds, but that would require 4 separate AI video generations.
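If you want to plan this out before you start generating, the math is just a round-up division. Here is a minimal Python sketch of it. The 8-second clip length comes from the limit described above; the `plan_segments` name and its parameters are made up for illustration and are not part of Google Flow.

```python
import math


def plan_segments(script_seconds, clip_seconds=8.0):
    """Split a script into back-to-back clips of at most clip_seconds each.

    Returns a list of (start, end) times in seconds. The 8-second default
    is an assumption taken from the per-generation limit described above.
    """
    if script_seconds <= 0 or clip_seconds <= 0:
        raise ValueError("durations must be positive")
    # Round up: a partial clip still costs a full generation.
    count = math.ceil(script_seconds / clip_seconds)
    return [
        (i * clip_seconds, min((i + 1) * clip_seconds, script_seconds))
        for i in range(count)
    ]


segments = plan_segments(30)
print(len(segments))  # 4 generations for a 30-second script
for start, end in segments:
    print(f"{start:>4.0f}s to {end:>4.0f}s")
```

The last clip only needs to cover 6 seconds of script (24s to 30s), which is why a 30-second script lands on 4 generations rather than 3.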

In previous versions, you would have to use Veo 2 for the first frame setting to work.

What this meant in practice was that your Veo 3 segment (the first 8 seconds) and your Veo 2 segment (the next 8 seconds) would look mostly similar, but the quality of the Veo 2 output would be noticeably lower, especially if you knew what to look for.

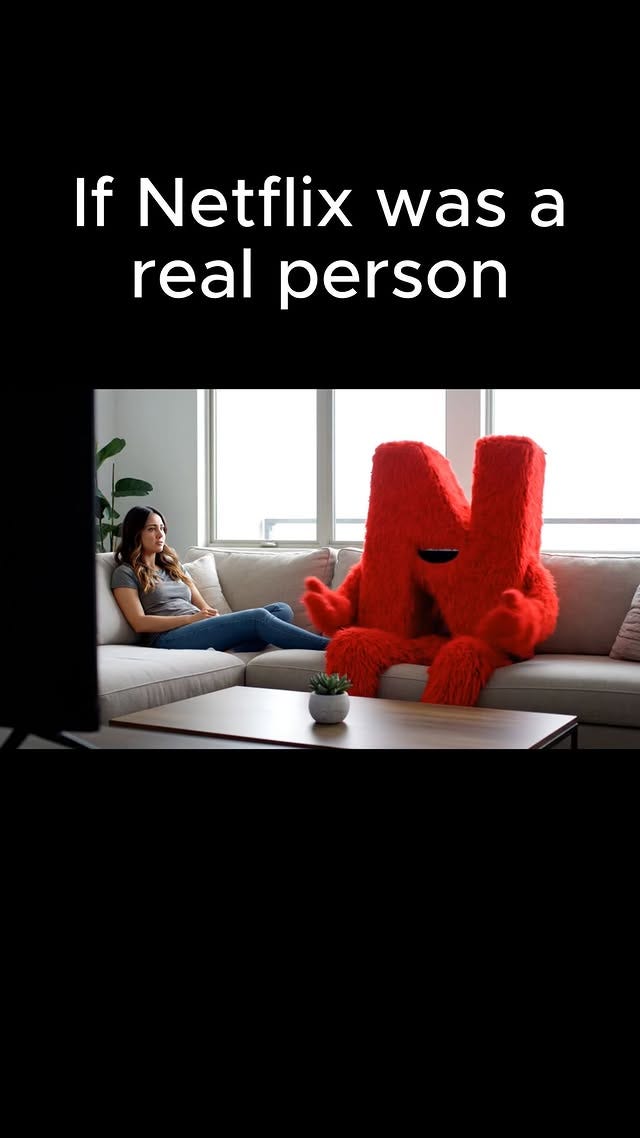

If you look closely in my Netflix mascot video, you might notice that the quality in the second half of the video is almost imperceptibly lower than the first half.

That’s a direct result of having to use Veo 3 for the first half, and Veo 2 for the second half.

Here’s my Netflix mascot video below. See if you can spot where it shifts from Veo 3 to Veo 2.

For the casual viewer, the shift from Veo 3 to Veo 2 is nearly invisible…

But as the one creating those videos, I want them to be as high-quality as possible, from start to finish, every single time.

Veo 3.1 allows me to do that on every video now.

Some issues do remain…

One quick example: if a mascot in my video has a certain type of teeth or tongue, and the saved first frame does not show its mouth open, I might get a slightly different mouth design.

The same goes for the mascot’s name, which I usually have written on their costume: it needs to be fully visible in the first frame as well. Otherwise, the Veo 3.1 follow-up output might show the wrong name or a misspelled one.

True, this is pretty small potatoes in the grand scheme of things, but just something to be aware of if you decide to try it out for yourself.

Another quick way to carry the last frame into the next segment is the new Extend feature, which essentially does the same thing without having to take a screenshot.

It works off the same last-frame-to-first-frame principle, so again, you have to make sure that the final frame shows all the important details that will need to be included.

All of this takes place in the Scenebuilder feature, which is Google Flow’s built-in editor tool, a very rudimentary timeline editor.

Think Final Cut or CapCut, but without any of the editing features you’re used to.

A feature I don’t use much is the last frame setting, in which you can tell Veo 3.1 what you want the last shot to show.

This can be especially useful if you have a climactic moment that needs to show specific elements in your video.

For my mascot videos, the script and dialogue are the most important elements and where the comedy lies, in conjunction with the mascot’s personality and costume design.

Because of that, I rarely need to indicate what the final frame should show, so long as the video is funny and the humor comes through like I intend it to.

Pencil and Insertion Tool

The pencil/insertion tool is a new feature that Google Flow released to let you add items to videos you have already generated.

In the screenshot below, I asked Google Flow to add a glass of beer to the mascot’s hand.

Here’s the before and after:

You can see that it did add in the beer, exactly where I asked it to.

In another video, I asked it to add a hat to one of the characters. But it didn’t fit the person’s head, and it wasn’t backwards like I requested either. So these features are still a work-in-progress for sure.

These are just a couple of examples, but they show what I’m referring to with the new Pencil Insertion feature.

They noted that object removal is coming soon, which will be a huge step forward.

Right now, we can add objects into videos, but we can’t take anything out…yet.

Closing Thoughts

Overall, Veo 3.1 is a definite advance over 3.0, and a leap forward from 2.0.

So, we’re moving ahead as AI video generators go, and the big things that we need to see (character consistency and object insertion/removal) are getting better with each release.

This is all super exciting and I can’t wait to see what comes out next.

Thanks for reading!